Key Takeaways

- AI is not inherently objective; it inherits and can amplify human biases from its training data.

- The misconception of AI as a neutral calculator is dangerous and overlooks its human-created origins.

- Addressing bias in AI requires understanding that it's a reflection of societal issues embedded in the data.

Ethical Automation: How to Avoid Bias in Your AI Outputs

Artificial intelligence is often presented as a purely objective, data-driven tool. We imagine it as a neutral calculator, free from the messy, irrational biases that cloud human judgment. But this is a dangerous misconception. The reality is that AI models are not born in a vacuum; they are created by humans and trained on vast amounts of human-generated data. In the process, they inherit, reflect, and can even amplify our own societal biases and prejudices.

This isn't a theoretical, dystopian problem. AI bias is having real-world consequences today. An AI-powered hiring tool was found to penalize resumes that included the word "women's," because it had been trained on historical data from a male-dominated industry. AI systems used to assess creditworthiness have been reported to offer lower credit limits to people in minority neighborhoods, even when their financial profiles were comparable to those of applicants in wealthier areas.

For a small business using AI for marketing, HR, or customer service, ignoring the risk of bias is not an option. An unintentionally biased AI can lead to discriminatory marketing campaigns, a less diverse workforce, and significant damage to your brand's reputation. Ethical automation requires a conscious and proactive effort to identify and mitigate bias. This guide will explain how AI bias occurs and provide practical steps you can take to ensure your use of AI is fair, equitable, and responsible.

Where Does AI Bias Come From?

AI bias is rarely the result of a programmer with malicious intent. It's a systemic problem that typically originates from one of two sources:

1. Biased Training Data: This is the most common cause. AI models learn by analyzing massive datasets. If that data reflects historical inequalities or stereotypes, the AI will learn those biases as facts.

- Example: If an AI is trained on decades of data about corporate executives, a group that has historically been predominantly white and male, it may learn to associate the characteristics of white males with the concept of "successful executive." It might then down-rank equally qualified female or minority candidates in a recruitment task.

2. Flawed AI Algorithms or Human Oversight: Sometimes, the way the AI is designed or how its output is interpreted can introduce bias.

- Example: An algorithm designed to maximize clicks on a job advertisement might learn that it can get more clicks by showing high-paying tech job ads primarily to men, reinforcing a stereotype and creating a discriminatory feedback loop.
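The feedback loop in the second example can be sketched in a few lines of Python. This is a minimal, deterministic simulation under invented assumptions: a hypothetical greedy ad server always shows the next impression to the audience group with the higher observed click-through rate, both groups are assumed to be equally interested in the ad, and a small early sample happened to favor men by chance. The names (`GroupStats`, `run_greedy_ad_server`) and every number are illustrative, not taken from any real ad platform.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class GroupStats:
    impressions: int
    clicks: float

    @property
    def ctr(self) -> float:
        # Observed click-through rate; 0.0 if the group was never shown the ad.
        return self.clicks / self.impressions if self.impressions else 0.0


def run_greedy_ad_server(true_ctr: dict[str, float],
                         stats: dict[str, GroupStats],
                         rounds: int) -> dict[str, int]:
    """Hypothetical click maximizer: each round, show the ad to the group with
    the highest *observed* CTR. Clicks are credited at the group's true rate
    (in expectation) so the sketch is deterministic."""
    shown = {group: 0 for group in stats}
    for _ in range(rounds):
        best = max(stats, key=lambda group: stats[group].ctr)
        stats[best].impressions += 1
        stats[best].clicks += true_ctr[best]
        shown[best] += 1
    return shown


# Assumption: both groups are equally interested in the ad.
true_ctr = {"men": 0.05, "women": 0.05}
# Assumption: a small, noisy early sample happened to favor men.
stats = {
    "men": GroupStats(impressions=100, clicks=6.0),
    "women": GroupStats(impressions=100, clicks=4.0),
}
shown = run_greedy_ad_server(true_ctr, stats, rounds=1000)
print(shown)  # {'men': 1000, 'women': 0}
```

Because this server never shows the ad to women again, it never gathers the data that would reveal the two groups are equally interested, so a bit of early noise hardens into a permanent skew. Production ad systems may add deliberate exploration, but the sketch shows why optimizing for clicks alone can lock a stereotype in place.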

Understanding that bias is often an unintentional byproduct of the data is the first step toward combating it.
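A toy example shows how this happens without anyone intending it. The Python sketch below "trains" a deliberately naive model by counting how often people in each group succeeded in some hypothetical historical records, then uses those counts to score new candidates. The records, group labels, and scoring formula are invented for illustration; real models are far more complex, but the underlying problem is the same: the model faithfully reproduces whatever pattern the data contains.

```python
from collections import defaultdict

# Hypothetical historical records: (group, years_of_experience, became_executive).
# Everyone has the same experience; the outcome gap reflects past exclusion, not ability.
HISTORY = [
    ("group_a", 10, True), ("group_a", 10, True),
    ("group_a", 10, True), ("group_a", 10, False),
    ("group_b", 10, True), ("group_b", 10, False),
    ("group_b", 10, False), ("group_b", 10, False),
]


def learn_success_rates(records):
    """'Train' a naive model: the share of past records in each group that ended in success."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for group, _experience, succeeded in records:
        totals[group] += 1
        successes[group] += int(succeeded)
    return {group: successes[group] / totals[group] for group in totals}


def score_candidate(rates, group, years_of_experience):
    """Naive score: learned group success rate, scaled by experience (capped at 10 years)."""
    return rates.get(group, 0.0) * min(years_of_experience, 10) / 10


rates = learn_success_rates(HISTORY)
# Two equally experienced candidates get different scores purely because of group.
print(score_candidate(rates, "group_a", 10))  # 0.75
print(score_candidate(rates, "group_b", 10))  # 0.25
```

Simply deleting the group column is not a complete fix, because other features (a club name, a zip code, a word like "women's" on a resume) can act as proxies for group membership and let a model rediscover the same pattern.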

Practical Steps to Promote Fairness and Mitigate Bias

You don't need to be an AI ethicist to make a difference. By incorporating these practices into your workflows, you can significantly reduce the risk of your AI perpetuating harmful biases.

1. Be Conscious of the Data Source

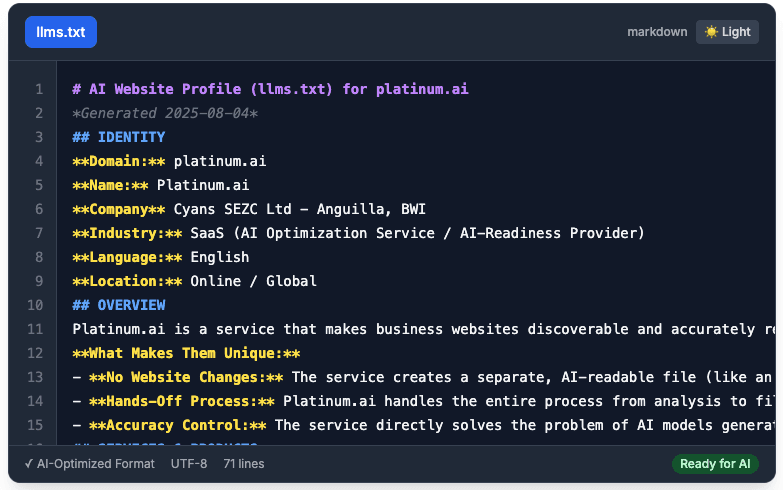

Always remember: the AI you are using (like ChatGPT) was trained largely on an enormous snapshot of text from the public internet. Even after filtering, that text is full of biases, stereotypes, and problematic content. Assume that the AI's 'worldview' is inherently skewed and treat its outputs with a healthy dose of skepticism.

2. Engineer Your Prompts for Neutrality

The way you phrase your prompt can have a huge impact on the output. You can actively guide the AI toward a more neutral and inclusive response.

- Bad Prompt (invites bias): "Write a job description for a strong, aggressive sales leader."

  - The AI might associate these gender-coded words with male candidates and produce a biased description.

- Good Prompt (promotes neutrality): "Write a job description for a sales leader. The ideal candidate should be results-driven, an excellent communicator, and a skilled negotiator. Use gender-neutral language and focus on skills and qualifications."
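A quick automated check can flag coded wording like "strong" and "aggressive" before a prompt is sent or AI-generated copy is published. The Python sketch below uses a short, hypothetical word list chosen only for illustration; it is not a validated lexicon, and a real check would need a carefully researched list plus human judgment.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately short word lists for illustration only.
MASCULINE_CODED = {"aggressive", "strong", "dominant", "competitive", "fearless"}
FEMININE_CODED = {"nurturing", "supportive", "gentle", "sympathetic"}


def flag_coded_words(text: str) -> dict[str, list[str]]:
    """Return coded words found in `text`, grouped by category, in order of appearance."""
    words = re.findall(r"[a-z]+", text.lower())
    return {
        "masculine": [word for word in words if word in MASCULINE_CODED],
        "feminine": [word for word in words if word in FEMININE_CODED],
    }


bad = "Write a job description for a strong, aggressive sales leader."
good = ("Write a job description for a sales leader. The ideal candidate should be "
        "results-driven, an excellent communicator, and a skilled negotiator.")
print(flag_coded_words(bad))   # {'masculine': ['strong', 'aggressive'], 'feminine': []}
print(flag_coded_words(good))  # {'masculine': [], 'feminine': []}
```

A keyword match only catches obvious wording; it cannot judge tone or context, which is why human review of the output still matters.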

When using AI to generate marketing personas or ad copy, explicitly instruct it to consider a diverse range of ages, genders, ethnicities, and backgrounds. For example: "Generate three different customer personas for my coffee shop. Ensure they represent a diversity of ages and professions."

3. Implement Rigorous Human Review (Especially for High-Stakes Tasks)

This is the most critical safeguard. An AI should never be the final decision-maker in any process that significantly affects people's lives or opportunities. This is the 'human-in-the-loop' model.

- For HR and Recruiting: Use AI to help you draft a job description or to perform an initial screen of resumes for key qualifications. But a diverse group of human beings must be responsible for reviewing the shortlisted candidates and making the final hiring decision. Never let an AI automatically reject a candidate.

- For Marketing Campaigns: When using AI to generate ad copy or imagery, a human must review the output to ensure it is inclusive and not unintentionally offensive. An AI trained on biased data might consistently depict a 'doctor' as a white man. It is the human's job to catch this and correct it, either by regenerating the image with a more inclusive prompt or by choosing a different image altogether.
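The rule that an AI should never automatically reject a candidate can be built into the workflow itself. The Python sketch below shows one hypothetical way to do that: the AI's score (assumed here to be a 0.0 to 1.0 match score from some screening tool) only decides how a candidate is prioritized for human review, and there is simply no code path that rejects anyone. The class names, the 0.7 threshold, and the score scale are all assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    SHORTLIST_FOR_HUMAN_REVIEW = "shortlist_for_human_review"
    HUMAN_REVIEW_REQUIRED = "human_review_required"
    # Deliberately no AUTO_REJECT option: only a person can turn a candidate down.


@dataclass
class ScreeningResult:
    candidate_id: str
    ai_match_score: float  # hypothetical 0.0-1.0 score from an AI screening tool


def route_candidate(result: ScreeningResult, shortlist_threshold: float = 0.7) -> Route:
    """Use the AI score only to prioritize; every path ends with a human decision."""
    if result.ai_match_score >= shortlist_threshold:
        return Route.SHORTLIST_FOR_HUMAN_REVIEW
    return Route.HUMAN_REVIEW_REQUIRED


for result in [ScreeningResult("c-001", 0.91), ScreeningResult("c-002", 0.35)]:
    print(result.candidate_id, route_candidate(result).value)
# c-001 shortlist_for_human_review
# c-002 human_review_required
```

Making "reject" unrepresentable in the code, rather than relying on a policy document, means no configuration change can quietly let the AI become the final decision-maker.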

4. Test for Bias

If you are using an AI for a repeatable task, you can actively test its outputs for bias.

- The 'Flip Test': Give the AI a prompt and then give it the same prompt with a key demographic detail changed. For example:

- Prompt 1: "Assess this resume for a software engineer role. The candidate's name is John."

- Prompt 2: "Assess this resume for a software engineer role. The candidate's name is Priya."

If the AI's assessment of the exact same resume differs noticeably depending on the perceived gender or ethnicity of the name, you have likely uncovered a bias in the model. Because AI outputs vary from one run to the next, repeat each version several times before drawing a conclusion.
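If you run the flip test regularly, a small script can handle the repetition and comparison. The Python sketch below assumes you wrap your AI tool in a function (here a hypothetical `assess` callable) that takes a prompt and returns a numeric score; the 1-10 scale, the number of runs, and the tolerance are illustrative assumptions. To keep the sketch runnable, it uses a fake, deliberately biased assessor as a stand-in for a real model call.

```python
from __future__ import annotations

from statistics import mean
from typing import Callable


def flip_test(assess: Callable[[str], float], template: str, names: list[str],
              runs: int = 5, tolerance: float = 0.5) -> tuple[dict[str, float], bool]:
    """Run the same prompt with only the name swapped, several times each.
    Returns the mean score per name and whether the largest gap exceeds `tolerance`."""
    averages = {
        name: mean(assess(template.format(name=name)) for _ in range(runs))
        for name in names
    }
    gap = max(averages.values()) - min(averages.values())
    return averages, gap > tolerance


def fake_biased_assess(prompt: str) -> float:
    # Hypothetical stand-in for a real model call; it deliberately scores
    # "Priya" lower so the harness has something to detect.
    return 6.0 if "Priya" in prompt else 8.0


template = ("Assess this resume for a software engineer role on a 1-10 scale. "
            "The candidate's name is {name}. <identical resume text here>")
averages, flagged = flip_test(fake_biased_assess, template, ["John", "Priya"])
print(averages, flagged)  # {'John': 8.0, 'Priya': 6.0} True
```

The tolerance is a judgment call: a tiny gap may just be run-to-run noise, while a consistent gap across many repetitions and several name pairs is a stronger signal worth investigating.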

5. Provide Feedback and Choose Your Tools Wisely

- Use the 'Feedback' Button: Many AI tools include a thumbs-up/thumbs-down feedback mechanism. If you receive an output that you believe is biased, report it. This can help the companies behind these tools identify and correct issues in their models.

- Scrutinize Specialized Tools: If you are considering a specialized AI tool for a task like hiring or credit scoring, you must demand transparency from the vendor. Ask them directly: "What steps have you taken to identify and mitigate bias in your model? Can you provide documentation on your fairness audits?"
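One concrete check commonly used in hiring fairness audits is a comparison of selection rates across groups. In US employment guidance, the "four-fifths rule" treats a group whose selection rate is below 80% of the highest group's rate as showing possible adverse impact. The Python sketch below computes those ratios from hypothetical screening outcomes; the group labels and counts are invented, and a calculation like this could help you sanity-check a vendor's documentation or your own tool's decisions.

```python
from __future__ import annotations


def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (number selected, number of applicants), applicants assumed > 0."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    top = max(rates.values())
    if top == 0:
        raise ValueError("No one was selected, so impact ratios are undefined.")
    return {group: rate / top for group, rate in rates.items()}


# Hypothetical outcomes from an AI screening tool: (selected, applicants).
outcomes = {"group_a": (50, 100), "group_b": (30, 100)}
ratios = impact_ratios(outcomes)
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]  # four-fifths threshold
print(ratios, flagged)  # {'group_a': 1.0, 'group_b': 0.6} ['group_b']
```

A ratio below 0.8 is a reason to investigate, not proof of discrimination, and clearing it does not prove a tool is fair; with small numbers of applicants, the ratios can swing widely by chance.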

Ethical automation is not an accident; it's a choice. It requires a commitment to awareness, diligence, and continuous improvement. By acknowledging that AI is a mirror that reflects the data it's shown, we can take on the crucial responsibility of cleaning that mirror. For any business, building a reputation for fairness and inclusivity is not just good ethics—it's good business. And in the age of AI, that's more important than ever.