Key Takeaways

- AI assistants and Google's crawlers interpret website content differently, so traditional SEO tactics alone are no longer enough.

- Historically, website optimization centered on pleasing Google's crawlers through keywords, backlinks, and meta descriptions.

- The emergence of AI assistants necessitates a new approach to website content strategy beyond established SEO practices.

Why AI Assistants Can’t 'Read' Your Website Like Google Does

For twenty years, the rules of the digital game were clear: make your website great for Google. You learned about keywords, backlinks, and meta descriptions. You focused on creating content that would please the Google crawler, helping it understand what your site was about so it would rank you higher in the search results. You got good at Search Engine Optimization (SEO).

Now, a new player has entered the field: the AI assistant. And this new player follows a completely different set of rules. When a user asks Siri, Alexa, or ChatGPT a question, the AI's goal isn't to provide a list of websites; it's to provide a single, direct, factual answer. This fundamental difference in purpose means that the way these AIs "read" the internet is radically different from how Google's crawlers do it.

Failing to understand this distinction is one of the biggest blind spots for businesses today. A website perfectly optimized for traditional search can be nearly invisible to an AI assistant. This article will break down why this is the case by explaining the core difference between a search index and a knowledge graph—the two competing ways of organizing the world's information.

The Old Way: Google and the Web Index

Think of the internet as a colossal, disorganized library with billions of books (websites) and no central librarian. When Google started, its brilliant innovation was to create a comprehensive index of this library.

Here’s how it works:

- Crawling: Google sends out automated bots, called crawlers or spiders, that travel across the web by following links from one page to another.

- Indexing: As they crawl, they analyze the content on each page: the text, the headings, the image alt text. They take note of which keywords are used and how often.

- Ranking: They also analyze the relationships between pages. A link from a highly respected site (like the New York Times) to your site acts as a vote of confidence, boosting your authority (this is the basis of PageRank).

When you type a search query, Google doesn't search the live internet. It searches its own pre-built index. It looks for the pages that best match your keywords and have the most authority, and then it presents them to you as a list of ten blue links.
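To make the index idea concrete, here is a minimal sketch of a keyword index with a crude authority score. The page URLs, texts, and link counts are invented for illustration, and a raw inbound-link count is only a stand-in for PageRank, not how Google actually ranks pages.

```python
from collections import defaultdict

# Hypothetical pages: URL -> (page text, number of inbound links).
PAGES = {
    "shop.example/sale": ("huge summer sale on garden furniture", 12),
    "blog.example/summer": ("summer travel tips and summer recipes", 3),
    "news.example/garden": ("garden furniture trends this year", 40),
}

def build_index(pages):
    """Map each keyword to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, (text, _links) in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def search(index, pages, query):
    """Return URLs that contain every query word, most-linked first."""
    words = query.lower().split()
    if not words:
        return []
    matches = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(matches, key=lambda url: pages[url][1], reverse=True)

INDEX = build_index(PAGES)
print(search(INDEX, PAGES, "summer sale"))       # ['shop.example/sale']
print(search(INDEX, PAGES, "garden furniture"))  # news.example first, then shop.example
```

Notice that the query never touches the pages themselves. It only looks up words in the pre-built index and returns a ranked list of documents, not an answer.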

In this model, your prose, your blog posts, and your clever marketing copy are all valuable. The crawler reads this content to understand what your site is about. A beautiful banner image with the text "Summer Sale" might not be readable, but if the words "Summer Sale" are in your H1 heading, the crawler sees it. This is the world of SEO.

The New Way: AI and the Knowledge Graph

AI assistants operate on a different principle. Their goal is not to point you to a book in the library; it's to read all the books, synthesize the information, and give you the single best answer. To do this, they rely on what's called a knowledge graph.

An index is a list of documents and the keywords they contain. A knowledge graph is a network of entities and the relationships between them.

- An entity is a specific thing: a person (Tom Hanks), a place (Chicago), an event (Super Bowl LIX), or a business (Your Company Inc.).

- A relationship defines how these entities connect: Tom Hanks starred in Forrest Gump. Chicago is located in Illinois. Your Company Inc. is open from 9 AM to 5 PM.

An AI assistant doesn't "read" the prose on your homepage to guess your business hours. It queries the knowledge graph with a specific question: "What is the 'hours' relationship connected to the 'Your Company Inc.' entity?" If that relationship exists as a verified fact in the knowledge graph, the AI can state it with confidence. If it doesn't, the AI has to fall back on guessing by trying to parse your website text—a process that is slow, unreliable, and often inaccurate.
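A knowledge graph lookup can be sketched as a query over (entity, relationship, value) triples. This is a toy model using the examples above; the relationship names such as `hours` and `starred_in` are made up for illustration, and real knowledge graphs are far larger and more elaborate.

```python
# Facts stored as (entity, relationship, value) triples.
TRIPLES = [
    ("Tom Hanks", "starred_in", "Forrest Gump"),
    ("Chicago", "located_in", "Illinois"),
    ("Your Company Inc.", "hours", "9 AM to 5 PM"),
]

def query(triples, entity, relationship):
    """Return every value linked to the entity by the given relationship."""
    return [value for subj, rel, value in triples
            if subj == entity and rel == relationship]

print(query(TRIPLES, "Your Company Inc.", "hours"))  # ['9 AM to 5 PM']
print(query(TRIPLES, "Your Company Inc.", "phone"))  # [] because no such fact is stored
```

The second query comes back empty. That empty result is the situation in which an assistant has to fall back on guessing from your page text.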

A Practical Example: The Sale Banner

- Your Website: You have a prominent banner at the top of your homepage. It's a beautiful image with text that says: "Huge Summer Sale! July 1st - July 31st!"

- Google's Crawler Sees: If you were smart about SEO, you also have an H1 tag that says <h1>Huge Summer Sale</h1>. The crawler indexes your page under the keywords "summer sale." When someone searches for that, your site might appear.

- The AI Assistant Sees: The AI can't reliably read the text in your image. Even if it sees the H1 tag, it doesn't understand the meaning. It sees a string of words. A user asking, "When does the summer sale at [Your Business] end?" presents a challenge. The AI has to try and parse the sentence "July 1st - July 31st!" and infer that the end date is July 31st. This is prone to error.
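To see why guessing from free text is fragile, consider this rough sketch of a date guesser. It is a hypothetical illustration, not how any particular assistant works: the `guess_end_date` helper and its regular expression are invented here, and the year has to be passed in because the banner never states one.

```python
import re
from datetime import date

BANNER_TEXT = "Huge Summer Sale! July 1st - July 31st!"

MONTHS = {
    "January": 1, "February": 2, "March": 3, "April": 4,
    "May": 5, "June": 6, "July": 7, "August": 8,
    "September": 9, "October": 10, "November": 11, "December": 12,
}

def guess_end_date(text, assumed_year):
    """Treat the last 'Month day' mention as the end date; the year is a guess."""
    pattern = r"(" + "|".join(MONTHS) + r")\s+(\d{1,2})(?:st|nd|rd|th)?"
    found = re.findall(pattern, text)
    if not found:
        return None
    month, day = found[-1]
    return date(assumed_year, MONTHS[month], int(day))

print(guess_end_date(BANNER_TEXT, 2025))         # 2025-07-31
print(guess_end_date("Sale ends 31/07!", 2025))  # None
```

The guess only works when the wording happens to match the pattern, and the year is pure assumption. Change the phrasing slightly and the answer disappears.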

How Structured Data Fixes This:

With structured data (like Schema.org), you would add invisible code to your page that explicitly defines the sale as an event entity:

    <div itemscope itemtype="https://schema.org/SaleEvent">
      <h1 itemprop="name">Huge Summer Sale</h1>
      <meta itemprop="startDate" content="2025-07-01">
      <meta itemprop="endDate" content="2025-07-31">
    </div>

Now, you have provided a clear, machine-readable fact: endDate = 2025-07-31. You have fed the knowledge graph. When the user asks the question, the AI doesn't have to guess. It can query for the endDate property of the SaleEvent entity and give a perfect, confident answer. Your business becomes a reliable source of truth.
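Here is a small sketch of how a program could pull those facts out of the markup, using only Python's standard `html.parser` module. The `MicrodataReader` class is a simplified illustration that handles one flat item like the snippet above; it is not a full microdata parser and not what any specific assistant runs.

```python
from html.parser import HTMLParser

SNIPPET = """
<div itemscope itemtype="https://schema.org/SaleEvent">
  <h1 itemprop="name">Huge Summer Sale</h1>
  <meta itemprop="startDate" content="2025-07-01">
  <meta itemprop="endDate" content="2025-07-31">
</div>
"""

class MicrodataReader(HTMLParser):
    """Collect itemprop values from a single, non-nested microdata item."""

    def __init__(self):
        super().__init__()
        self.item_type = None
        self.props = {}
        self._pending = None  # itemprop whose value is the element's text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "itemscope" in attrs:
            self.item_type = attrs.get("itemtype")
        prop = attrs.get("itemprop")
        if prop is None:
            return
        if "content" in attrs:
            # <meta> style: the value lives in the content attribute.
            self.props[prop] = attrs["content"]
        else:
            # Otherwise the value is the text inside the element.
            self._pending = prop

    def handle_data(self, data):
        if self._pending and data.strip():
            self.props[self._pending] = data.strip()
            self._pending = None

reader = MicrodataReader()
reader.feed(SNIPPET)
print(reader.item_type)         # https://schema.org/SaleEvent
print(reader.props["endDate"])  # 2025-07-31
```

No pattern matching on prose is involved. The end date is read directly from a labeled property, which is what makes the answer dependable.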

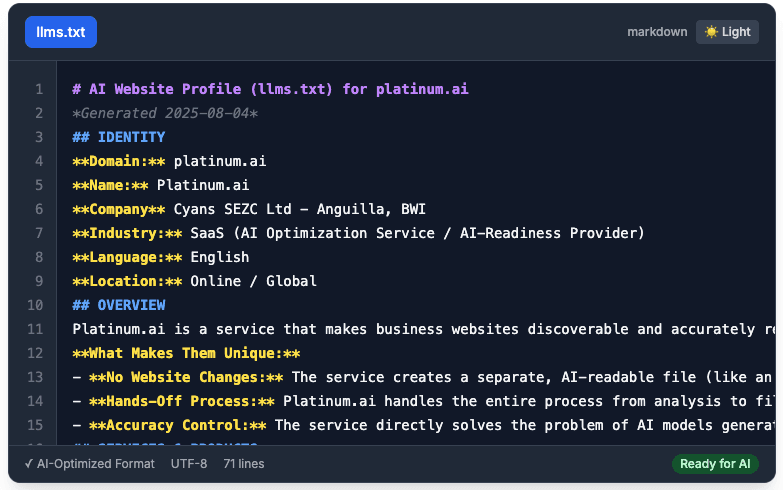

Your Website Is Now a Database for AIs

This shift requires a fundamental change in how you think about your website. For two decades, your website's primary audience was human, with the Google crawler as an important secondary audience. Today, you have a third, equally important audience: AI assistants.

This new audience doesn't care about your beautiful design, your clever branding, or your flowing prose. It is a ruthlessly pragmatic audience that cares about one thing only: clear, accurate, verifiable facts.

Optimizing for this audience is the essence of AI Optimization (AIO). It means going beyond just having information on your website and moving toward having information structured within your website. It's the only way to ensure that when a customer has a conversation with an AI about a business like yours, your name is the one that comes up.
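As one possible starting point, the sketch below generates a JSON-LD block for the business hours example used earlier. JSON-LD is another common way to publish Schema.org data. The business details are placeholders, and the Monday-to-Friday range is an assumption added for illustration, since the earlier example only gave 9 AM to 5 PM.

```python
import json

# Placeholder facts; replace with your own verified details.
business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Your Company Inc.",
    "openingHours": "Mo-Fr 09:00-17:00",  # weekdays are assumed, not from the article
}

def to_json_ld_script(data):
    """Wrap a dict in the script tag that carries JSON-LD inside an HTML page."""
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'

print(to_json_ld_script(business))
```

The printed tag can be pasted into the page's HTML, where it is invisible to human visitors and readable by machines.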

To be found in the age of AI, you can no longer just publish books for the library. You must also provide a perfect, detailed card for the catalog. Because in this new world, if you're not in the knowledge graph, you might as well be invisible.